Dedicated ROS 2 nodes for AI-enabled computer vision tasks

Topics: Open source tools, Edge AI, Open machine vision

When working on AI and computer vision customer projects, we are often tasked with implementing complex software that uses multiple sources of information handled by different analysis and processing algorithms. The decision process in these solutions is based on the results of deep neural networks performing tasks such as object detection, classification, tracking or recognition. These results can then be analyzed and used to trigger actions, e.g. moving a camera, notifying the system, or passing the collected data to other algorithms or devices for further analysis.

For implementing such multi-task applications, we often use the open source ROS 2 (Robot Operating System) framework to create a distributed network of modular, self-contained applications called nodes, communicating with each other in one of the following patterns:

- Client-server - a client sends a request, the server processes it and sends back a response with the results.

- Publisher-subscriber - a publisher sends a message on a particular topic, and all nodes subscribing to the topic receive the message.
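
The difference between the two patterns can be illustrated with a minimal in-process sketch. It uses only the Python standard library and is not the ROS 2 client API: `ToyBus`, its methods, and the topic and service names are hypothetical, chosen purely for illustration. A published message fans out to every subscriber of a topic, while a service call reaches exactly one server and returns its response.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


class ToyBus:
    """In-process stand-in for a node graph (hypothetical, not the ROS 2 API)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)
        self._services: Dict[str, Callable[[Any], Any]] = {}

    # Publisher-subscriber: one message fans out to every subscriber of a topic.
    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        for callback in self._subscribers[topic]:
            callback(message)
        # Report how many subscribers received the message.
        return len(self._subscribers[topic])

    # Client-server: a request goes to exactly one server, which returns a response.
    def advertise(self, service: str, handler: Callable[[Any], Any]) -> None:
        if service in self._services:
            raise ValueError(f"service {service!r} already has a server")
        self._services[service] = handler

    def call(self, service: str, request: Any) -> Any:
        return self._services[service](request)


bus = ToyBus()

# Two independent subscribers receive the same frame.
gui_frames: List[str] = []
detector_frames: List[str] = []
bus.subscribe("camera/frame", gui_frames.append)
bus.subscribe("camera/frame", detector_frames.append)
delivered = bus.publish("camera/frame", "frame-0")

# A single server answers a request and returns a result to the caller.
bus.advertise("camera/set_exposure", lambda value: {"ok": True, "exposure": value})
response = bus.call("camera/set_exposure", 120)
```

In a real ROS 2 system the same roles are played by nodes that may live in different processes or on different devices. The sketch only captures the one-to-many versus one-to-one shape of the communication.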

With experience spanning many projects with overlapping needs, we were able to isolate the most common use cases and decided to create a collection of tailored open source ROS 2 packages for them. This blog note introduces the first two: a camera node for adjusting camera settings and capturing frames, and a GUI node for visualizing data from ROS 2 topics and services. We will also demonstrate testing and prototyping capabilities for AI-enabled applications in ROS 2 made possible through integration with Antmicro’s open source Kenning framework.

Modular solutions based on ROS 2

ROS 2 is a set of tools and libraries that allow for implementation of complex robotics solutions in the form of small, configurable, modular programs communicating with each other. Modular solutions like this tend to be easier to maintain, less prone to errors, and easier to parallelize by design than monolithic applications. Another advantage lies in their scalability - adding new algorithms, sensors, and effectors is usually a matter of implementing a new node or reusing an existing one (e.g. with different parameters). Including such a node in an existing communication flow also requires fewer code adjustments and offers additional perks such as execution in parallel, obtaining data from other parts of the system, or synchronization with the rest of the software. We often take advantage of these properties as part of various projects, where we either implement an application using ROS 2 from scratch, or rework an existing, overcomplicated solution to split its functionalities into separate nodes.

What is more, ROS 2 communication also works across devices - it is a distributed solution in which nodes in the same domain discover each other and communicate without any central entity maintaining the connection.

Contrary to common belief, ROS 2 is not constrained to Linux and can also be used with micro-ROS to allow communication with microcontrollers running an RTOS like Zephyr (e.g. parsing data from accelerometers, or controlling a servo), which you can read about in more detail in a previous blog note.

Camera Node

Computer vision is a huge driver for embedded devices based on high-performance platforms like Jetson AGX Orin or Jetson Orin NX. In order to simplify camera handling in our ROS 2 projects, we created the camera-node package. This node utilizes Antmicro’s grabthecam utility, a small, hackable, V4L2-based library for manipulating camera settings and collecting frames. You can read more about this tool in another blog note.

Camera-node, thanks to grabthecam, can work with various cameras and can be easily adjusted to a specific use case.

It extracts settings directly from camera drivers and provides them as ROS 2 parameters, so that they can be controlled from launch files, ROS 2 tools, and even other nodes, without being limited to a predefined set of parameters. Camera-node grants full control over grabbing frames, letting users synchronize frame collection with other actions and easily adjust settings (via grabthecam) to work with less popular color formats or pixel value types. If you find yourself working a lot with color formats, do check out our Raviewer tool as well - read more about it in a dedicated blog note.
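
The idea of turning driver-reported settings into parameters can be sketched as follows. This is a minimal standard-library illustration under stated assumptions, not the camera-node or grabthecam API: `Control`, `ToyParameterServer`, and the control names and ranges are all hypothetical. The `Control` fields mirror a subset of what a V4L2 control query reports (minimum, maximum, default).

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Control:
    """One setting as reported by a driver (hypothetical, illustrative type)."""
    name: str
    minimum: int
    maximum: int
    default: int


class ToyParameterServer:
    """Exposes whatever controls were discovered, instead of a fixed list."""

    def __init__(self, controls: Iterable[Control]) -> None:
        known = list(controls)  # materialize once so generators also work
        self._controls: Dict[str, Control] = {c.name: c for c in known}
        self._values: Dict[str, int] = {c.name: c.default for c in known}

    def names(self) -> List[str]:
        return sorted(self._values)

    def get(self, name: str) -> int:
        return self._values[name]

    def set(self, name: str, value: int) -> bool:
        # Reject unknown parameters and values outside the reported range.
        control = self._controls.get(name)
        if control is None or not control.minimum <= value <= control.maximum:
            return False
        self._values[name] = value
        return True


# Illustrative values only; a real camera reports its own controls and ranges.
discovered = [
    Control("brightness", 0, 255, 128),
    Control("exposure_time", 1, 10000, 250),
]
params = ToyParameterServer(discovered)
accepted = params.set("exposure_time", 500)  # within range
rejected = params.set("brightness", 999)     # out of range, value unchanged
```

Because the parameter list is built from what the driver reports, a camera with a different set of controls would yield a different set of parameters without any change to the code.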

GUI Node

Since many of the cases we work on can benefit from a UI illustrating what happens within an application and granting control over it, we are also introducing a GUI node. The gui-node package is a library that lets developers easily integrate existing ROS 2 topics and services into a GUI application. This project utilizes ImGui as its GUI library and the Vulkan API as its backend, allowing for GPU-enabled rendering, where GPU-equipped platforms (e.g. desktops, NVIDIA Jetson platforms, etc.) can efficiently render graphics, including 3D scenes.

The GUI Node’s architecture is built upon the interaction of Widgets and RosData-like objects. Widgets are objects rendered by the GUI node, which dictate what new windows in the GUI should look like, how to interact with them, and how to render a current view based on the last remembered state in an associated RosData object. Widgets can be used for drawing tables, buttons, text prompts, frames (e.g. from the Camera node) and more, depending on the use case. Communication with the rest of the ROS 2 ecosystem is performed using so-called RosData classes - they interact with ROS 2 topics or services and store the last remembered state in a custom format that can be conveniently used in Widgets.
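
The split of responsibilities described above can be sketched conceptually. The snippet below is written in plain Python for brevity and is not the gui-node API: `ToyRosData`, `ToyStringWidget`, and the message layout are hypothetical. The data object converts incoming messages into a convenient format and remembers the last state, while the widget only reads that state when asked to draw (here it returns text instead of issuing ImGui calls).

```python
from typing import Callable, Generic, Optional, TypeVar

Msg = TypeVar("Msg")
State = TypeVar("State")


class ToyRosData(Generic[Msg, State]):
    """Remembers the last state derived from incoming messages (hypothetical)."""

    def __init__(self, convert: Callable[[Msg], State]) -> None:
        self._convert = convert
        self._state: Optional[State] = None

    def on_message(self, message: Msg) -> None:
        # Called by the communication side whenever new data arrives.
        self._state = self._convert(message)

    def last_state(self) -> Optional[State]:
        return self._state


class ToyStringWidget:
    """Renders the state remembered by its data object (hypothetical)."""

    def __init__(self, title: str, data: ToyRosData) -> None:
        self.title = title
        self.data = data

    def draw(self) -> str:
        # A real widget would issue drawing calls; this one returns text.
        state = self.data.last_state()
        body = "<no data yet>" if state is None else state
        return f"[{self.title}] {body}"


# The converter turns a raw message into the format the widget wants.
data = ToyRosData(convert=lambda msg: msg["data"].strip())
widget = ToyStringWidget("Status", data)

before = widget.draw()                           # nothing received yet
data.on_message({"data": "  camera ready \n"})   # simulated incoming message
after = widget.draw()
```

Keeping the conversion in the data object means a widget can be redrawn every frame from the stored state, independently of how often messages arrive.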

The GUI node provides a ready-to-use backbone for efficient GUI implementation - creating a functional GUI is a matter of writing callbacks for handling certain topics or services, using pre-existing Widgets for rendering most of the ROS 2 events, or adding new, relatively small snippets of ImGui drawing methods to create a new Widget. With this implementation, developers can jump right into visualizing information coming from ROS 2 without having to worry about handling Vulkan, setting up ROS 2 logic, or maintaining the ImGui context.

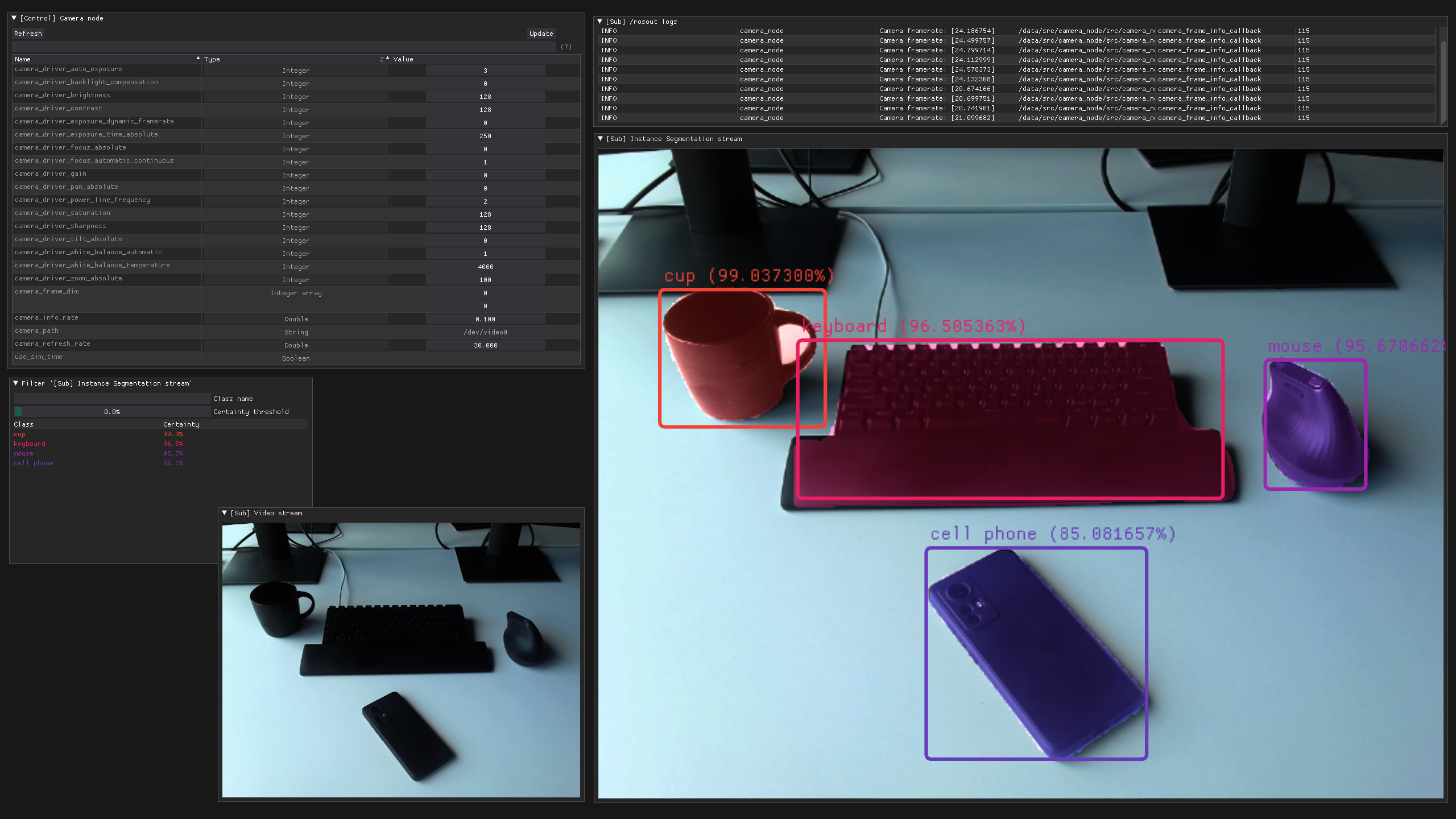

GUI Node offers several pre-implemented widgets out of the box:

- VideoWidget - displays a video stream from a given topic

- DetectionWidget - displays detection predictions with a video stream from a given topic

- RosoutWidget - displays ROS 2 log information

- ControlWidget - provides a GUI to view and modify parameters for a given ROS 2 node

- StringWidget - displays std_msgs::String messages

Kenning in the ROS 2 ecosystem

Kenning is a framework intended to simplify development of machine learning applications. It allows users to test and deploy ML pipelines on a variety of platforms (edge devices included), regardless of the underlying frameworks.

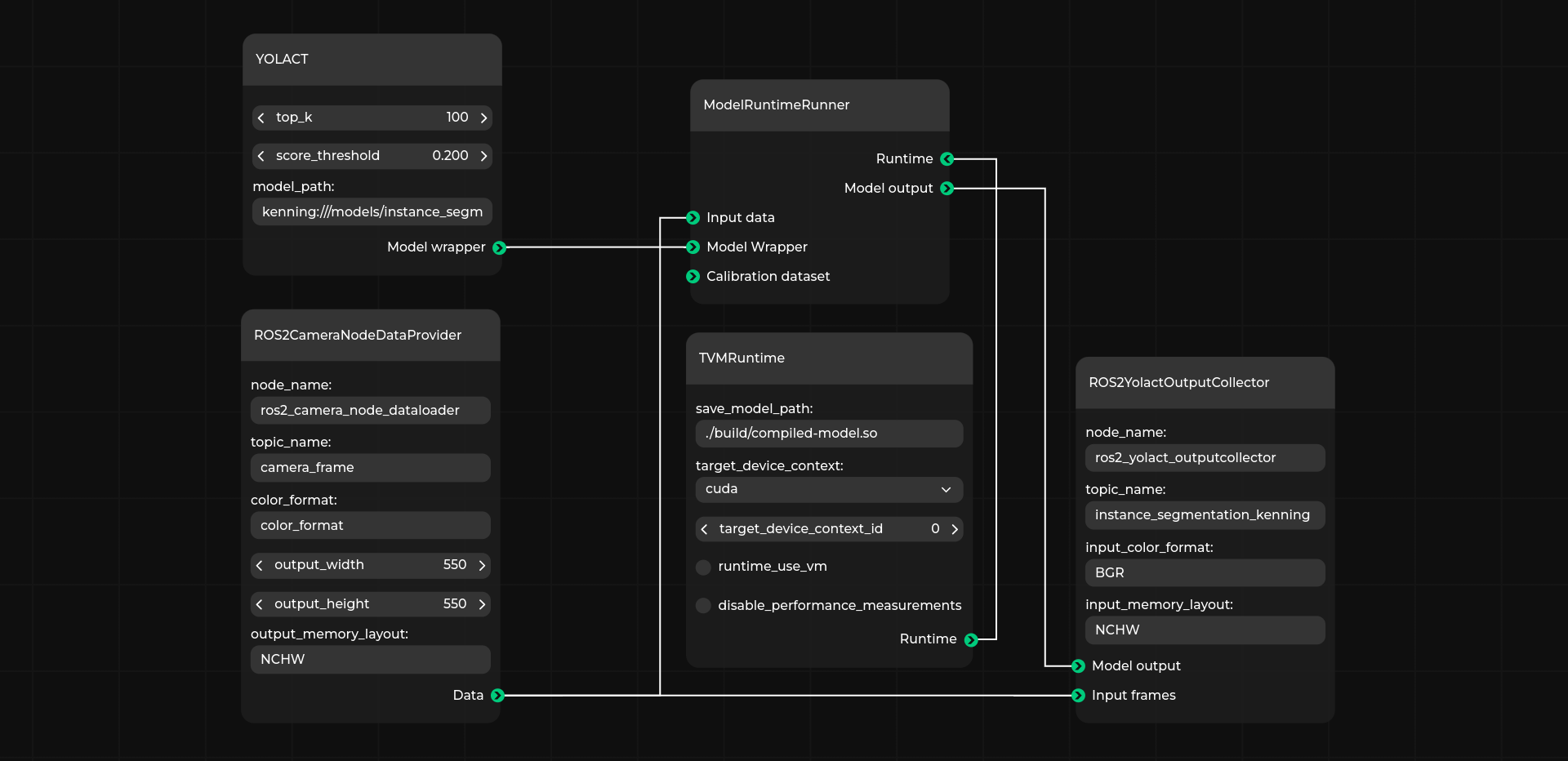

Kenning aims at quick prototyping of AI-enabled applications with KenningFlows, where specific stages of a pipeline are represented with Runners in a single JSON-based definition file. You can observe ROS 2 nodes working in tandem with Kenning in the demo available in the examples directory of the ros2-gui-node repo. For the purposes of the demo, DataProvider and OutputCollector KenningFlow runners based on ROS 2 were implemented, enabling interaction with the Camera node and the GUI node from within Kenning.

When executed, the demo uses the GUI node to provide an instance segmentation visualization of a Kenning Runtime, and the Camera node for streaming and access to camera settings. Note that the demo requires a CUDA-enabled NVIDIA GPU for inference acceleration and a camera for streaming frames to the Kenning Runtime. In the image below, you can see the runtime’s visualization in Pipeline Manager, Antmicro’s GUI tool for visualizing, editing, validating, and running AI flows in Kenning. You can read more about the tool in a previous blog note.

Develop AI-enabled robotics solutions with ROS 2 and Kenning

Are you interested in extending the use cases of your existing ROS 2-based solutions with AI-enabled computer vision, or building robotics systems leveraging Machine Learning from scratch? Take advantage of Antmicro’s open source toolkits and years of experience providing commercial support to customers spanning various industries, and reach out at contact@antmicro.com to discuss your project.