Deploying hybrid AI solutions on edge device fleets


Topics: Edge AI, Open cloud systems

AI algorithms often require significant processing power typically associated with data centers. However, privacy concerns, latency and security considerations, together with increased compute capabilities of edge devices, are making local AI data preprocessing increasingly common.

Examples include video data that has to be anonymized for legal compliance, or processed immediately after capture for safety reasons. In effect, most real-world applications are a mix of cloud and local processing, and while helping our customers develop their products, Antmicro often addresses both sides of the equation.

Edge devices typically offer higher bandwidth and lower latency, since data is processed where it is captured, but they are often not powerful enough to handle advanced, AI-based data processing. In such cases, mechanisms are needed to store data, upload it to the cloud and offload some computing tasks to more powerful machines. Cloud computing, on the other hand, offers virtually limitless processing power on demand, but at the price of reduced bandwidth and higher latency.

Finding the golden mean between the two worlds is key to achieving optimal performance, and at Antmicro we specialize in creating tailored, cross-disciplinary solutions, based on solid foundations of open source, such as the edge-to-cloud AI systems for device fleets we will describe in this note.

Distributing AI between edge and cloud: applications

It’s not always easy to determine which part of the processing should be deployed to cloud machines and which can be performed on the edge. This requires a deep understanding of the available edge AI platforms and their capabilities. At Antmicro we have been honing this knowledge through projects involving hardware such as NVIDIA Jetson, Qualcomm Snapdragon, NXP’s i.MX series, Google Coral and other dedicated AI accelerators, and by building dedicated tools that enable explainable, measurable and portable AI.

Kenning, our open source framework for deploying deep learning models on the edge, helps build portable and reusable AI pipelines that are easy to test, benchmark and deploy on target hardware. The recently introduced modular runtime flows enable efficient development and execution of tested and optimized models on the device. Thanks to its modular design, the runtime lets users easily exchange and reuse modules for loading data from sensors and handling the results of ML models.
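To illustrate what exchangeable runtime modules mean in practice, here is a minimal Python sketch of a runtime assembled from a data provider, a model and a result handler. All class and function names (`DataProvider`, `ResultHandler`, `Runtime`, `threshold_model`, etc.) are hypothetical and are not Kenning's actual API; the sketch only shows that each stage can be swapped without touching the others.

```python
from typing import Callable, Iterable, List, Protocol


class DataProvider(Protocol):
    """Source of input samples, e.g. a camera or sensor driver (hypothetical interface)."""

    def samples(self) -> Iterable[List[float]]: ...


class ResultHandler(Protocol):
    """Consumer of model outputs, e.g. a logger or uploader (hypothetical interface)."""

    def handle(self, sample: List[float], prediction: int) -> None: ...


class ListProvider:
    """Replays pre-recorded samples; stands in for a real sensor during testing."""

    def __init__(self, data: List[List[float]]) -> None:
        self._data = data

    def samples(self) -> Iterable[List[float]]:
        return iter(self._data)


class CollectingHandler:
    """Keeps predictions in memory instead of, say, publishing them."""

    def __init__(self) -> None:
        self.results: List[int] = []

    def handle(self, sample: List[float], prediction: int) -> None:
        self.results.append(prediction)


class Runtime:
    """Wires a provider, a model and a handler together; each part is replaceable."""

    def __init__(self, provider: DataProvider,
                 model: Callable[[List[float]], int],
                 handler: ResultHandler) -> None:
        self.provider = provider
        self.model = model
        self.handler = handler

    def run(self) -> int:
        """Process every sample from the provider; return how many were handled."""
        count = 0
        for sample in self.provider.samples():
            self.handler.handle(sample, self.model(sample))
            count += 1
        return count


def threshold_model(sample: List[float]) -> int:
    """Toy stand-in for an optimized ML model: 1 if the sample mean exceeds 0.5."""
    return int(sum(sample) / len(sample) > 0.5)
```

Replacing `ListProvider` with a real sensor reader, or `CollectingHandler` with a network uploader, leaves `Runtime` and the model untouched.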

Which data to keep and process locally depends heavily on the application and the hardware used. In some cases, spotty network coverage of a field-deployed device (e.g. in logistics or automotive applications) means that data should preferably be processed on the edge and/or stored for upload at specific intervals or in high-bandwidth locations.

At other times, regulations or other compliance requirements impose strict restrictions on what can and cannot be done; for example, data usually has to be anonymized on the edge device itself before it can be sent further. The increasing capabilities of edge devices enable new use cases, and we often help innovative customers seize the opportunities created by changing circumstances, such as new privacy protection laws.

Data processing on the edge using open source, modular frameworks

For real-world applications, we often find ourselves implementing fairly complex local processing pipelines, which involve:

- Analyzing, aggregating and processing raw data coming from various sensors, such as cameras, thermometers, gyroscopes and accelerometers, into formats suitable for further analysis or storage

- Controlling various system components, such as fans, lighting, cameras and other effectors, to gather data or control the edge device behavior

- Running classic Computer Vision, Machine Learning or Deep Learning algorithms on pre-processed data, e.g. for object detection and tracking, text recognition or object segmentation

- Processing data based on predictions, e.g. anonymizing sensitive data or sorting objects by type or quality

- Storing the processed data until connectivity allows it to be sent to the cloud

- Sending the aggregated, anonymized and otherwise processed data to the cloud
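As an example of processing data based on predictions, the sketch below pixelates the regions of a grayscale frame that a detector has flagged as sensitive (e.g. faces or license plates), so that only the anonymized frame leaves the device. The frame is a plain list of lists and the bounding boxes are assumed to come from some upstream detection model; a real pipeline would typically use an image processing library such as OpenCV instead.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale image, frame[y][x] in 0..255
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) with exclusive upper bounds


def pixelate(frame: Frame, boxes: List[Box], block: int = 4) -> Frame:
    """Return a copy of `frame` where every box is replaced by block-averaged pixels."""
    out = [row[:] for row in frame]
    height, width = len(frame), len(frame[0])
    for x0, y0, x1, y1 in boxes:
        # Clip the box to the frame so imprecise detections cannot raise IndexError.
        x0, y0 = max(0, x0), max(0, y0)
        x1, y1 = min(width, x1), min(height, y1)
        for by in range(y0, y1, block):
            for bx in range(x0, x1, block):
                ys = range(by, min(by + block, y1))
                xs = range(bx, min(bx + block, x1))
                # Average the tile and write the mean back to every pixel in it.
                mean = sum(frame[y][x] for y in ys for x in xs) // (len(ys) * len(xs))
                for y in ys:
                    for x in xs:
                        out[y][x] = mean
    return out
```

Larger `block` values remove more detail; the original frame is never modified, so it can be discarded right after anonymization.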

To address this complexity, we typically develop this kind of application (which involves manipulating and coordinating various system components, algorithms, data processing and communication with remote parties) using the ROS(2) open source framework. This way, instead of creating a monolithic application handling everything listed above, we can subdivide our solution into separate applications (nodes) running in parallel and communicating with each other in a distributed way using topics (publish-subscribe messaging) and services (request-response calls). This allows us to create lean, clean, modular and scalable solutions.
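The decoupling that ROS(2) publish-subscribe messaging provides can be illustrated without ROS itself. The sketch below is a hypothetical in-process message bus, not the `rclpy` API: each "node" only knows topic names, so a detector or an uploader can be replaced or added without changing the code that publishes camera frames. In a real ROS 2 system the nodes would typically run as separate processes and communicate over the ROS 2 middleware.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class Bus:
    """Minimal synchronous publish-subscribe bus keyed by topic name."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver to every subscriber; the publisher never knows who is listening.
        for callback in self._subs[topic]:
            callback(message)


def make_pipeline(bus: Bus, uploaded: List[dict]) -> None:
    """Wire hypothetical detector, anonymizer and uploader nodes to topics."""

    def detector(frame: dict) -> None:
        # Toy detection: flag frames whose metadata says a person is visible.
        bus.publish("detections", {**frame, "sensitive": frame.get("person", False)})

    def anonymizer(det: dict) -> None:
        if det["sensitive"]:
            # Drop raw pixels of sensitive frames before they can leave the device.
            det = {k: v for k, v in det.items() if k != "pixels"}
        bus.publish("anonymized", det)

    bus.subscribe("camera/frames", detector)
    bus.subscribe("detections", anonymizer)
    bus.subscribe("anonymized", uploaded.append)  # stand-in for a cloud uploader node
```

Adding, for instance, a local storage node only requires one more `subscribe("anonymized", ...)` call; none of the existing nodes change.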

With edge platforms becoming ever more capable in terms of processing, we are able to use increasingly advanced ML, and especially DL, algorithms and chain them into complex AI solutions that analyze real-life data with outstanding results.

Edge data processing pipeline design

The rapidly evolving algorithms in the deep learning space require not only a good testing/benchmarking/deployment framework such as Kenning, but also the ability to manage and iterate over various versions of the algorithms running on deployed devices.

We have been helping customers in agriculture, robotics, factory automation and other areas to build AI pipelining frameworks and point-update mechanisms to keep their AI models up to date (this is on top of the typical complete system OTA update capabilities we often build for customers regardless of whether they are using deep learning).
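A model point-update mechanism can be as simple as comparing versions from a manifest, verifying the downloaded file and swapping it in atomically. The sketch below assumes a hypothetical JSON manifest with `version` and `sha256` fields and a model stored as a single `model.bin` file; a production system would additionally sign the manifest and keep the previous model around for rollback.

```python
import hashlib
import json
import os
import tempfile
from pathlib import Path


def apply_model_update(model_dir: Path, manifest_json: str, blob: bytes) -> bool:
    """Install `blob` as the active model if the manifest is newer and the hash matches.

    The manifest format {"version": int, "sha256": str} is a hypothetical example.
    Returns True if the model was replaced, False if it was already up to date.
    """
    manifest = json.loads(manifest_json)
    version_file = model_dir / "version"
    current = int(version_file.read_text()) if version_file.exists() else -1
    if manifest["version"] <= current:
        return False
    if hashlib.sha256(blob).hexdigest() != manifest["sha256"]:
        raise ValueError("checksum mismatch, refusing to install model")
    # Write to a temporary file in the same directory, then rename it atomically,
    # so the runtime never loads a half-written model.
    fd, tmp = tempfile.mkstemp(dir=model_dir)
    with os.fdopen(fd, "wb") as f:
        f.write(blob)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp, model_dir / "model.bin")
    # Recorded last: a crash before this line only causes a harmless reinstall.
    version_file.write_text(str(manifest["version"]))
    return True
```

Because only the model file changes, such an update is much smaller than a full system OTA image and can be rolled out independently of it.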

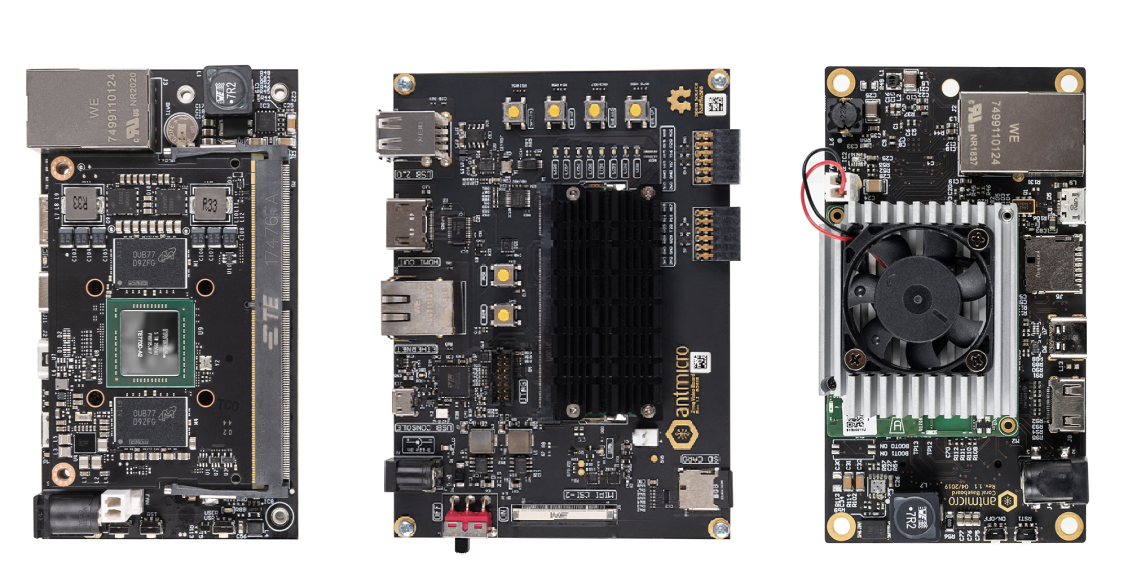

Our approaches are typically open source based and vendor neutral, as we work with a wide array of SoM vendors to cater to the needs of different customers. We use SoMs because they decrease design complexity and encourage reuse, two objectives that are very much in line with our open source approach.

Flexible integration of edge applications with the cloud

The fact that most real-world systems split AI processing between the edge and the cloud is reflected in a specific kind of device that we end up building over and over again: an LTE-enabled gateway with local AI capabilities.

Such gateways are often (but by no means always) based on edge platforms such as NVIDIA Jetson or Google Coral, and the complexity of such a project almost inevitably lies on the software side. Because the device operates as part of a larger system (many of our customers build entire fleets, with thousands of devices deployed in the field where manual fixes are not an option), there are a number of areas to take care of:

- Cloud connectivity

- Data gathering, retention, backups etc.

- OTA updates

- Fleet management

- Cloud AI

- End-to-end CI and system testing

Starting with connectivity, the obvious downside of connections over e.g. LTE is that they can be spotty and occasionally provide only degraded bandwidth. Since the amount of data to be transferred and the way it is used vary heavily by application, dedicated mechanisms for handling this unreliability have to be implemented on both the edge application and the cloud side to make the end-to-end application robust.

For example, data used for non-critical tasks may be stored locally and transmitted to the cloud when a driverless vehicle returns to a service facility or base. When no LTE connection is available, the edge device can automatically switch to a less demanding, local-only AI pipeline with fewer features. Data can also be buffered and split into chunks, with the lossiness of the connection accounted for at the application level.
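The buffering, chunking and pipeline fallback mentioned above can be sketched as a small store-and-forward queue. Payloads are split into fixed-size chunks, each chunk stays at the head of the queue until the `send` callback reports success, and nothing is dropped while the link is down. The `send` callback, chunk size and retry policy are illustrative assumptions; a production version would persist the queue to disk and add backoff. The hypothetical `pick_pipeline` helper shows the switch to a lighter, local-only pipeline.

```python
from collections import deque
from typing import Callable, Deque, Tuple

Chunk = Tuple[str, int, int, bytes]  # (payload id, chunk index, total chunks, data)


class StoreAndForward:
    """In-memory outbox that tolerates an unreliable uplink (illustrative only)."""

    def __init__(self, send: Callable[[Chunk], bool], chunk_size: int = 4096) -> None:
        self.send = send  # assumed to return True once the chunk is acknowledged
        self.chunk_size = chunk_size
        self.outbox: Deque[Chunk] = deque()

    def enqueue(self, payload_id: str, data: bytes) -> None:
        # Chunking means a dropped connection costs one chunk, not the whole file.
        total = max(1, -(-len(data) // self.chunk_size))  # ceiling division
        for i in range(total):
            part = data[i * self.chunk_size:(i + 1) * self.chunk_size]
            self.outbox.append((payload_id, i, total, part))

    def flush(self, link_up: bool) -> int:
        """Send queued chunks in order while the link works; return how many were sent."""
        sent = 0
        while link_up and self.outbox:
            if not self.send(self.outbox[0]):
                break  # keep the chunk at the head and retry on the next flush
            self.outbox.popleft()
            sent += 1
        return sent


def pick_pipeline(link_up: bool) -> str:
    """Use the full pipeline when cloud offload is possible, else a lighter local one."""
    return "full" if link_up else "local-only"
```

Sending chunks strictly in order keeps reassembly on the cloud side trivial, at the cost of one failed chunk blocking the ones behind it until the next flush.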

On the cloud side, it is important to make sure that data is properly stored and backed up, without generating excessive costs. Here, the hybrid cloud approach offered by Antmicro means we can help customers decide how to structure their cloud infrastructure and use the right balance between instantly scalable but somewhat costly (especially if improperly used) public cloud solutions and private infrastructure.

We make sure those setups are reproducible and traceable by following Infrastructure as Code principles with the help of tools such as Terraform or Ansible.

Cloud connectivity and data processing

Once the data is ready on the device, it can be sent to the cloud using mechanisms such as:

- MQTT - a lightweight publish-subscribe protocol for IoT applications, used mostly for sending simple sensor data, e.g. GPS location and accelerometer readings for cars, or humidity and temperature for greenhouse control devices

- Cloud Storage - used for larger files and data streams such as video feeds, images, point cloud readouts, etc.
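One simple way to apply this split is to route each payload by type and size: structured sensor readings are serialized as compact JSON for an MQTT topic, while large binary blobs go to object storage. The 64 KiB threshold, the `fleet/<device>/<kind>` topic layout and the object key scheme below are hypothetical choices for illustration; the actual publish and upload calls (from an MQTT client library or a cloud SDK) are deliberately left out.

```python
import json
from typing import Any, Tuple

MQTT_MAX_INLINE = 64 * 1024  # hypothetical size limit for data sent inline over MQTT


def route(device_id: str, kind: str, payload: Any, seq: int) -> Tuple[str, str, bytes]:
    """Return (channel, destination, body), where channel is "mqtt" or "storage"."""
    if isinstance(payload, (bytes, bytearray)):
        body = bytes(payload)
        if len(body) > MQTT_MAX_INLINE:
            # Large blobs (video, images, point clouds) go to object storage.
            return "storage", f"{device_id}/{kind}/{seq:08d}.bin", body
    else:
        # Structured readings are assumed to be small and are sent as compact JSON.
        body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    return "mqtt", f"fleet/{device_id}/{kind}", body
```

After a storage upload, the caller could still publish a short MQTT message containing the object key, so the cloud side learns about new files through the same channel as the telemetry.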

After verifying that the data was transferred correctly, e.g. by comparing MD5 checksums calculated from the data before and after the transfer, the data can be stored and processed further in the cloud.
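A minimal sketch of such a check using Python's standard `hashlib` module: the device computes the MD5 digest of a file in chunks (so large video files need not fit in memory) and compares it with the digest reported by the receiving side. Services differ in how they report MD5; Google Cloud Storage, for instance, exposes the `md5Hash` object metadata field base64-encoded, so the comparison below accepts both hex and base64 forms. MD5 is adequate for detecting accidental corruption, but not for protecting against deliberate tampering.

```python
import base64
import hashlib
from typing import BinaryIO


def md5_digest(stream: BinaryIO, chunk_size: int = 1 << 20) -> bytes:
    """Return the raw MD5 digest of a stream, reading it in `chunk_size` pieces."""
    h = hashlib.md5()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        h.update(chunk)
    return h.digest()


def transfer_ok(local_digest: bytes, remote_md5: str) -> bool:
    """Compare a local digest with a remote one given as hex or base64."""
    remote = remote_md5.strip()
    if remote.lower() == local_digest.hex():
        return True  # hex form, as printed by e.g. `md5sum`
    # Base64 is case-sensitive, so this comparison must be exact.
    return remote == base64.b64encode(local_digest).decode("ascii")
```

On a mismatch, the device would keep the local copy and re-queue the upload rather than delete the file.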

The process of sending data described above is similar across cloud providers, such as Google Cloud IoT Core, AWS IoT Core or Azure IoT, and we try to enable portable, open source interfaces that allow our customers to select what fits them best and to build local/hybrid cloud setups where needed.

Data sent over MQTT topics or to storage buckets can be used to oversee the fleet, control its work and collect data for improving the software and AI algorithms. It can also be processed by more computationally demanding AI algorithms that could not be deployed on IoT devices. For example, Google Cloud provides the Vision API, which can apply demanding image analysis models to data uploaded to cloud storage. The uploaded data can be annotated, categorized or described using Deep Learning models. Ultimately, the data delivered by the edge device can be enriched with more sophisticated and structured information coming from cloud processing pipelines.

Hardware-oriented innovation

While developing such edge-to-cloud applications, we can leverage the close relationships we have with many of the world’s largest technology companies, as we routinely perform R&D and innovation work together to build up new solutions and application areas in the relatively nascent hardware space rather than just using what’s already there.

For example, we are working with Google Cloud towards building hardware-centric cloud solutions, ranging from end-to-end AI systems which leverage Google’s infrastructure and our scalable IoT system testing tools such as Renode to cloud-assisted ASIC and FPGA development and prototyping.

We have built dozens of OTA and fleet management systems for applications as diverse as access control, industrial monitoring, smart city, logistics and agriculture, incorporating and building many open source building blocks. This allows us to offer a customized approach that is at the same time based on well-known “standards”.

Some interesting requests from our customers building LTE-connected gateways deployed in the field include partial (delta) updates, which save bandwidth compared to full system upgrades, and managing groups of devices deployed at different sites. We will describe these in a separate blog note in the near future.
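To give an idea of why partial (delta) updates save bandwidth, the sketch below compares two system images in fixed-size blocks and ships only the blocks whose hashes differ. This naive block-level scheme is purely an illustration, not necessarily the mechanism used in Antmicro's systems; dedicated update tools use more elaborate techniques such as binary diffing or content-defined chunking. The block size and function names are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple

BLOCK = 4096  # bytes per block; hypothetical value


def block_hashes(image: bytes, block: int = BLOCK) -> List[str]:
    """Hash every fixed-size block of an image (e.g. a root filesystem)."""
    return [hashlib.sha256(image[i:i + block]).hexdigest()
            for i in range(0, len(image), block)]


def make_delta(old: bytes, new: bytes, block: int = BLOCK) -> Tuple[int, Dict[int, bytes]]:
    """Return the new image length and only the blocks that differ from `old`.

    In practice the server could compare against hashes reported by the device
    instead of holding a copy of the device's current image.
    """
    old_h = block_hashes(old, block)
    changed: Dict[int, bytes] = {}
    for idx, h in enumerate(block_hashes(new, block)):
        if idx >= len(old_h) or old_h[idx] != h:
            changed[idx] = new[idx * block:(idx + 1) * block]
    return len(new), changed


def apply_delta(old: bytes, length: int, changed: Dict[int, bytes],
                block: int = BLOCK) -> bytes:
    """Rebuild the new image on the device from the old image plus changed blocks."""
    # Truncate or zero-pad the old image to the new length; changed blocks fill gaps.
    out = bytearray(old[:length].ljust(length, b"\0"))
    for idx, data in changed.items():
        out[idx * block:idx * block + len(data)] = data
    return bytes(out)
```

If a single byte changes in a multi-gigabyte image, only one block travels over LTE instead of the whole image.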

Comprehensive services for edge-to-cloud AI systems

Antmicro’s ability to create advanced edge implementations for device fleets, covering the hardware setup, the AI running on top of it and the entire device management and update flow, is highly valued by our customers. Our engineering services involve creating royalty-free, vendor neutral systems that can connect to both public and private (on-premise) clouds. We lend our experience and cross-disciplinary expertise to customers requiring not just individual solutions, but a complete, modular and transparent system, provided together with full documentation and a clear explanation of how it works.